Understanding Thrashing and How to Handle It

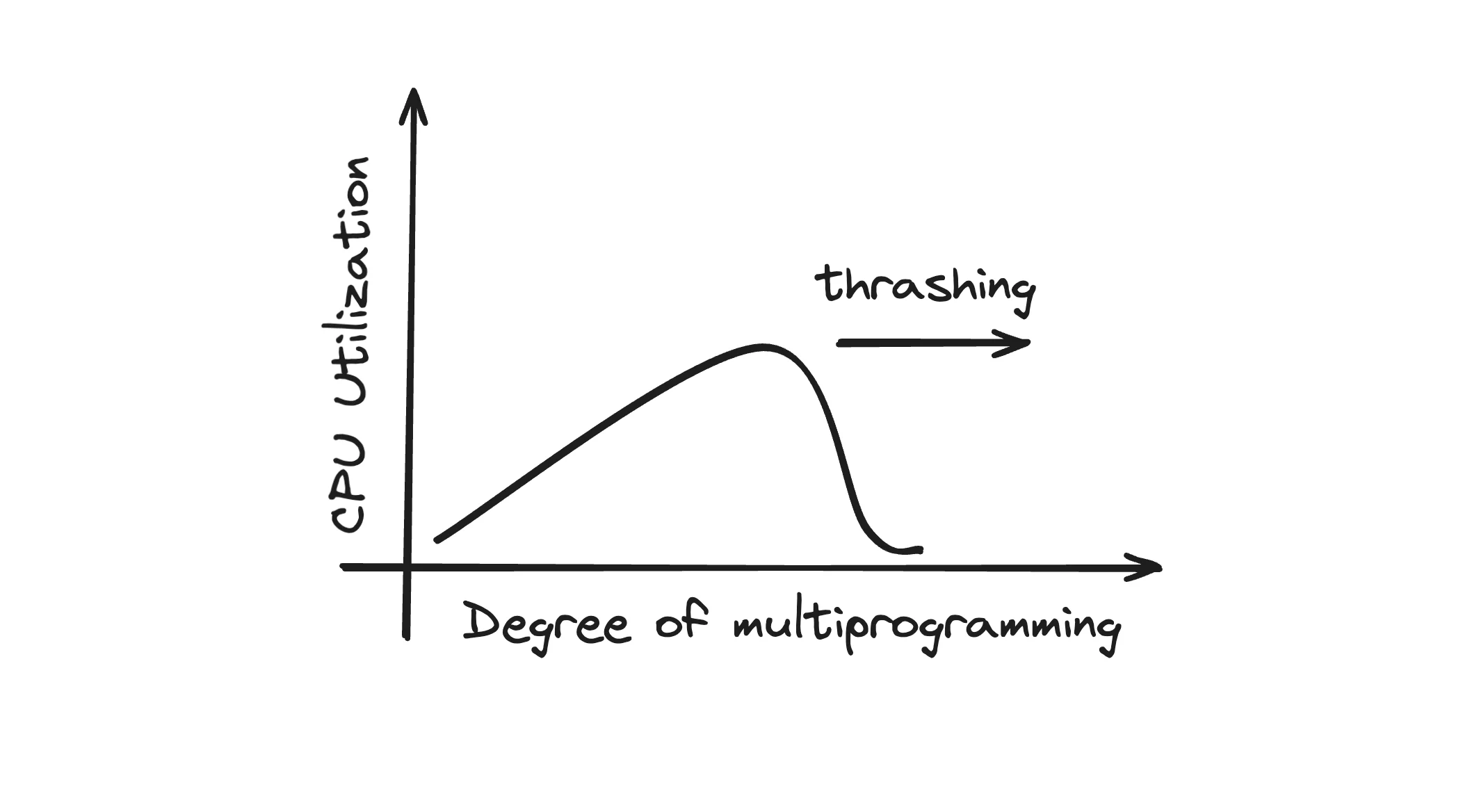

Thrashing

Thrashing is a condition in which a computer's virtual memory subsystem is so overloaded that the system spends more time swapping pages in and out of memory than executing processes.

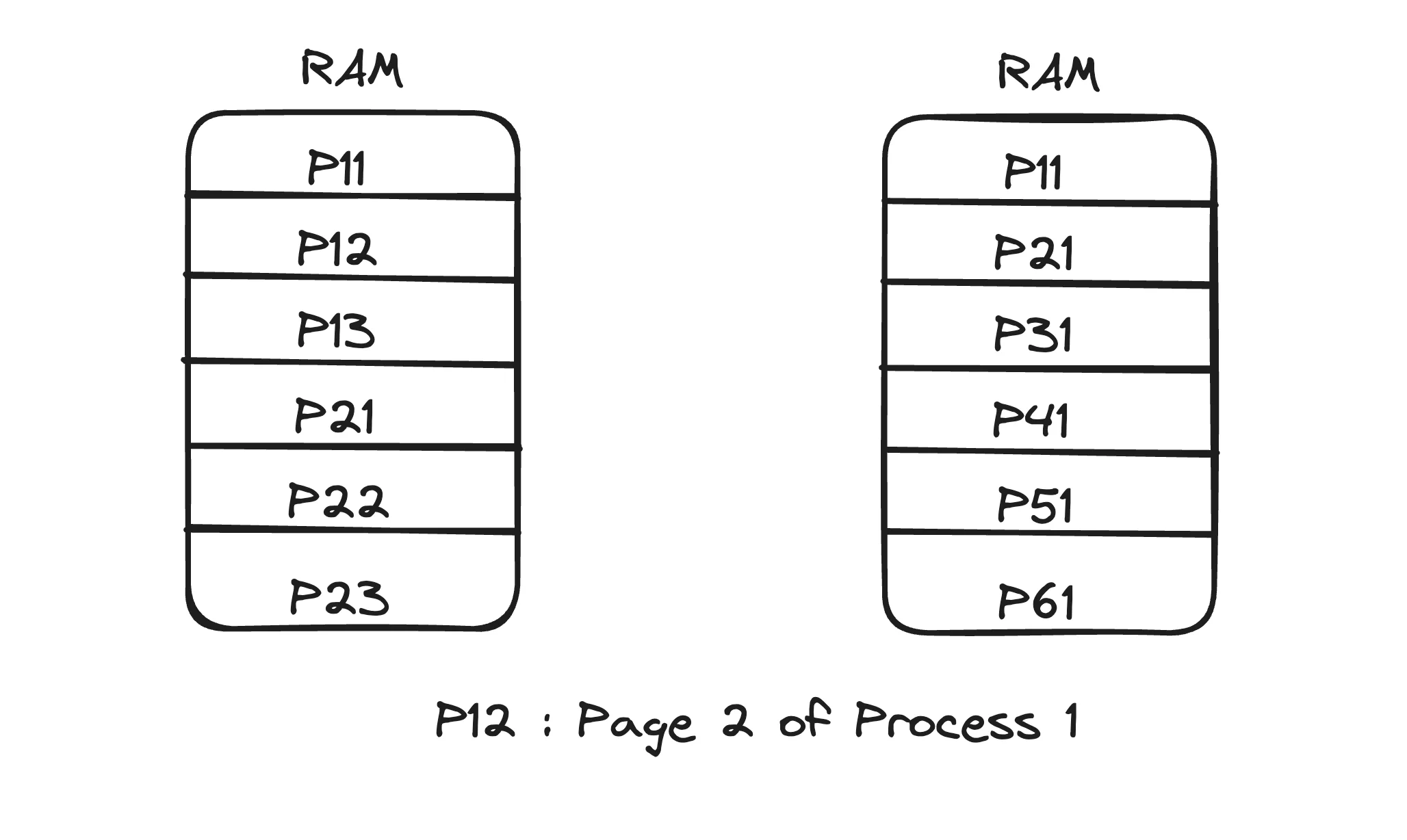

Scenario 1

Imagine there are two processes, P1 and P2, each with three pages loaded into memory. When P1 executes a function, it first accesses page1, which is already in memory. It then accesses page2 and page3, which are also loaded, so no page faults occur. When P1 attempts to access page4, however, a page fault occurs because that page is not in memory.

Observation: With a low degree of multiprogramming (few processes), thrashing does not occur. Each process has enough frames to hold most of the pages it needs, so page faults are rare.

Scenario 2

In this case, there are six processes (P1, P2, P3, P4, P5, P6), and only one page from each process is loaded into memory. When a function in P1 starts executing, it uses page1 but soon needs page2, which causes a page fault. The same pattern repeats for every other process.

Observation: The system spends more time handling page faults than executing processes. Thrashing occurs because of the high degree of multiprogramming: with six processes sharing memory, each process holds only one page, so every process keeps needing pages that are not loaded, which leads to excessive swapping.
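To make the contrast between the two scenarios concrete, here is a minimal Python sketch that counts page faults for one process under LRU replacement. LRU, the reference string, and the helper name `count_page_faults` are illustrative assumptions; the text does not name a replacement policy. Both scenarios are consistent with a memory of six frames (2 × 3 pages and 6 × 1 page), so the sketch assumes six frames split evenly among the processes.

```python
from collections import OrderedDict


def count_page_faults(references, num_frames, preloaded=()):
    """Count page faults for one process under LRU replacement (an assumed policy).

    references: pages the process touches, in order.
    num_frames: frames allocated to this process.
    preloaded:  pages already resident before the first reference.
    """
    if num_frames < 1:
        raise ValueError("a process needs at least one frame")
    # Resident pages; insertion order tracks recency (least recently used first).
    frames = OrderedDict((page, None) for page in list(preloaded)[-num_frames:])
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)      # hit: mark as most recently used
            continue
        faults += 1                       # miss: the page must be brought in from disk
        if len(frames) >= num_frames:
            frames.popitem(last=False)    # evict the least recently used page
        frames[page] = None
    return faults


TOTAL_FRAMES = 6                  # assumption: 2 x 3 pages (Scenario 1) = 6 x 1 page (Scenario 2)
refs = [1, 2, 3, 1, 2, 3, 4]      # hypothetical reference string for each process

for num_procs in (2, 6):
    frames_each = TOTAL_FRAMES // num_procs
    preloaded = range(1, frames_each + 1)   # pages 1..frames_each start in memory
    faults = count_page_faults(refs, frames_each, preloaded)
    print(f"{num_procs} processes, {frames_each} frame(s) each: {faults} page fault(s) per process")
```

With three frames per process, the only fault is on page4 (1 fault); with one frame per process, every reference after the first faults (6 faults). Multiplied across six processes, the system spends most of its time paging.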

Handling Thrashing

Working Set Model

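- Concept: The working set model tracks the pages a process is actively using. A process's working set at any moment is the set of pages it referenced in its most recent Δ page references (the working-set window). The model relies on locality of reference: a process tends to use the same small group of pages for a while before moving on to another group.

- Implementation: By keeping each process's entire working set in memory, the system minimizes page faults. Allocating enough frames to hold the working set of every active process prevents thrashing. If the total demand (the sum of all working-set sizes) exceeds the number of available frames, the system suspends a process and redistributes its frames.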

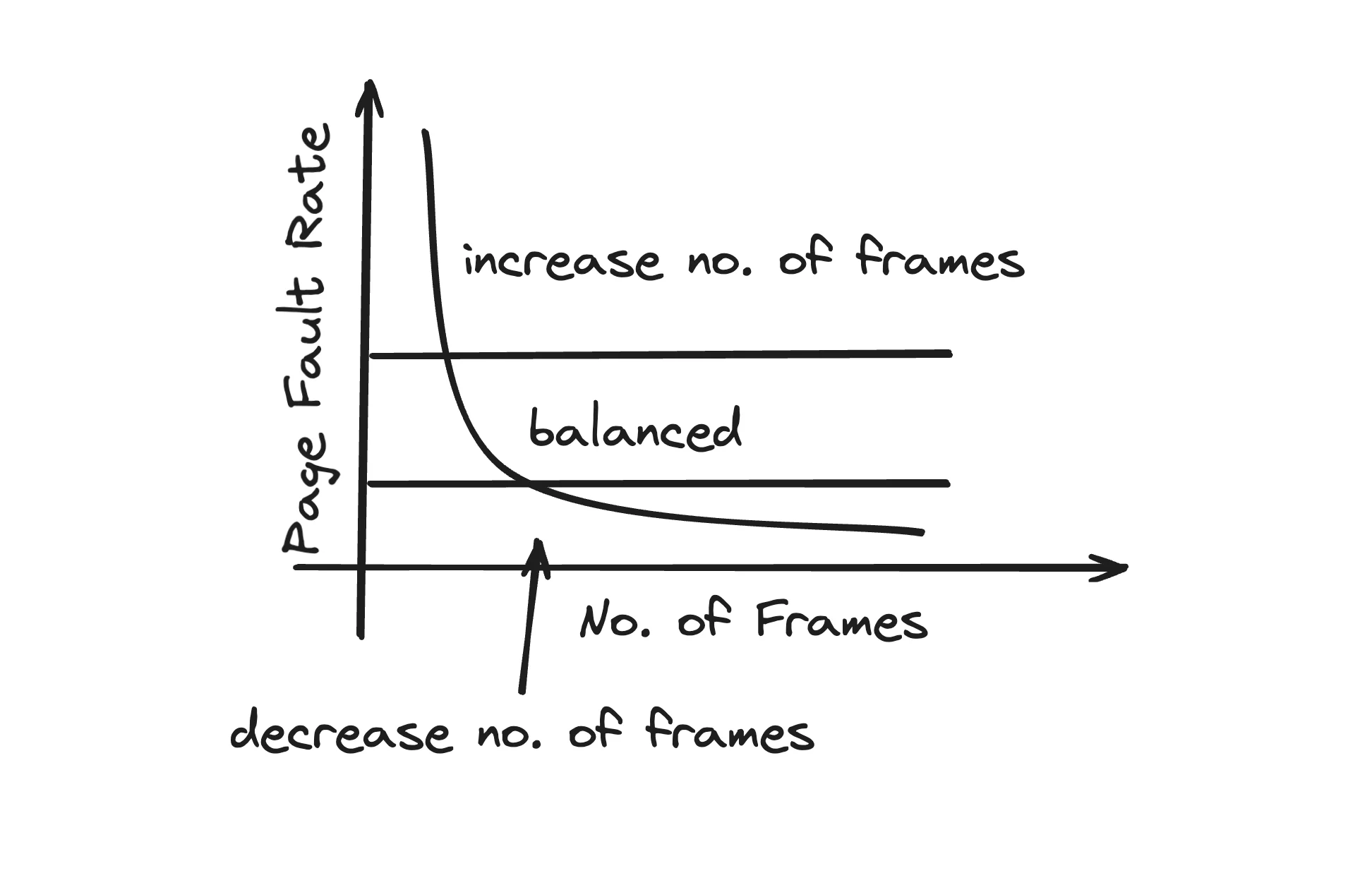
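The definition of a working set can be sketched in a few lines of Python. This is a minimal illustration, assuming the window Δ is counted in page references; the reference string, `DELTA`, `TOTAL_FRAMES`, and the helper names `working_set` and `total_demand` are hypothetical. The final loop compares the total demand D (the sum of working-set sizes) against the available frames.

```python
def working_set(references, t, delta):
    """Return WS(t, delta): the distinct pages touched in the most recent
    `delta` references, ending with reference number t (0-based, inclusive)."""
    if delta < 1:
        raise ValueError("delta must be at least 1")
    start = max(0, t - delta + 1)
    return set(references[start:t + 1])


def total_demand(working_sets):
    """Total frame demand D: the sum of every process's working-set size."""
    return sum(len(ws) for ws in working_sets)


refs = [1, 2, 1, 3, 1, 2, 4, 4, 4, 4]   # hypothetical reference string
DELTA = 4                                # hypothetical window size
TOTAL_FRAMES = 6                         # hypothetical number of physical frames

print(working_set(refs, 5, DELTA))   # {1, 2, 3}: the process is cycling through pages 1-3
print(working_set(refs, 9, DELTA))   # {4}: its locality has moved to page 4

# Admission check: when D exceeds the available frames, some process should be suspended.
ws = working_set(refs, 5, DELTA)
for num_procs in (2, 6):
    demand = total_demand([ws] * num_procs)   # every process assumed to have this working set
    status = "fits" if demand <= TOTAL_FRAMES else "exceeds memory: suspend a process"
    print(f"{num_procs} processes: D = {demand} ({status})")
```

Two processes with three-page working sets need exactly the six frames available, while six such processes need 18, which is the situation that leads to thrashing if every process is kept running.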

Page-Fault Frequency (PFF)

- Concept: This method monitors the rate at which page faults occur for each process.

- Implementation:

  - If a process's page-fault rate rises above an upper bound, the process does not have enough frames in memory. The system allocates more frames to it to reduce its page faults.

  - Conversely, if the page-fault rate falls below a lower bound, the process has more frames than it needs. The system can take frames away from it and give them to other processes.

  - By adjusting each process's allocation based on its page-fault rate, the system keeps the rate between the two bounds. A persistently low rate means a process is holding frames it does not need, so fewer processes fit in memory and the degree of multiprogramming drops. Balancing frame allocation in this way lets processes run efficiently without causing thrashing.
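The PFF policy can be sketched as a single decision per measurement interval. This is a minimal Python illustration under stated assumptions: the fault rate is measured as faults per memory reference, the bounds `lower=0.02` and `upper=0.10` are made-up values (the text gives no numbers), frames move one at a time, and `pff_adjust` is a hypothetical name. The "suspend" branch, taken when a process needs a frame but none is free, follows the common textbook treatment of PFF rather than anything stated above.

```python
def pff_adjust(allocated, fault_rate, free_frames, lower=0.02, upper=0.10):
    """One page-fault-frequency decision for a single process.

    allocated:   frames the process currently holds.
    fault_rate:  faults per memory reference in the last interval (assumed metric).
    free_frames: frames in the system's free pool.
    Returns (new_allocation, new_free_frames, action).
    """
    if not 0 <= lower < upper:
        raise ValueError("bounds must satisfy 0 <= lower < upper")
    if fault_rate > upper:
        if free_frames > 0:
            return allocated + 1, free_frames - 1, "grant"   # too many faults: add a frame
        return allocated, free_frames, "suspend"             # no frame available: lower multiprogramming
    if fault_rate < lower and allocated > 1:
        return allocated - 1, free_frames + 1, "reclaim"     # too few faults: give a frame back
    return allocated, free_frames, "keep"                    # rate is within bounds


print(pff_adjust(3, 0.25, 2))   # (4, 1, 'grant')
print(pff_adjust(4, 0.01, 1))   # (3, 2, 'reclaim')
print(pff_adjust(3, 0.25, 0))   # (3, 0, 'suspend')
print(pff_adjust(3, 0.05, 1))   # (3, 1, 'keep')
```

Leaving a gap between the two bounds keeps a process's allocation from oscillating every time its fault rate shifts slightly.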