HTTP

HTTP (HyperText Transfer Protocol) is the protocol that powers the web, enabling communication between clients (like browsers) and servers. Every version shares the same basic request/response model. Over the years, new versions have been developed, each improving on the last in performance, efficiency, and security.

HTTP 1.0

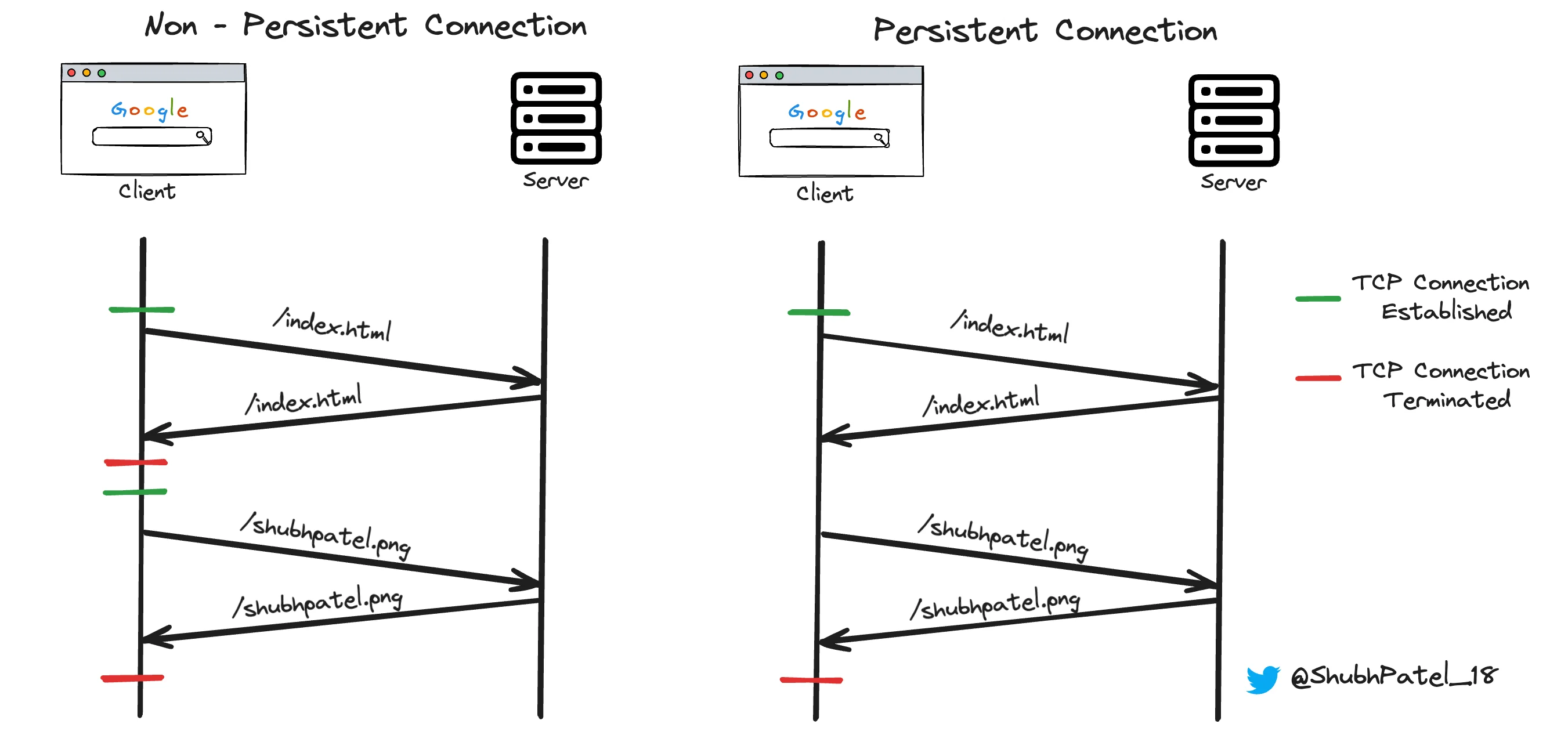

Persistent vs. Non-Persistent Connection

Non-Persistent Connection

In HTTP 1.0, each request for a resource used a separate TCP connection by default: the server closed the connection after sending its response. If a page needed multiple resources from the same server (images, scripts, stylesheets), each one required a new connection. Every new connection costs a TCP handshake (one extra round trip) before the request can be sent and starts over in TCP slow start, so this overhead noticeably hurt performance.
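To make the overhead concrete, here is a back-of-the-envelope sketch using the common textbook model: one round trip (RTT) for the TCP handshake plus one RTT per request/response, with requests sent one after another. It ignores transmission time, TLS, and the parallel connections browsers open; the function name `total_rtts` is purely illustrative.

```python
def total_rtts(num_objects, persistent):
    """Round trips needed to fetch num_objects sequentially (textbook model, no TLS)."""
    handshake = 1  # TCP three-way handshake before the first request can be sent
    if persistent:
        # One handshake, then one request/response round trip per object.
        return handshake + num_objects
    # Non-persistent: every object pays for its own handshake plus its request/response.
    return num_objects * (handshake + 1)


if __name__ == "__main__":
    rtt_ms = 50  # assumed network round-trip time
    for persistent in (False, True):
        rtts = total_rtts(11, persistent)
        print(f"persistent={persistent}: {rtts} RTTs, about {rtts * rtt_ms} ms")
```

Under these assumptions, a page of 11 objects (one HTML file plus 10 images) costs 22 RTTs without persistent connections and 12 with them; at a 50 ms RTT that is roughly 1.1 s versus 0.6 s.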

HTTP 1.1

HTTP 1.1 introduced several improvements to address the limitations of HTTP 1.0.

Persistent Connection

In HTTP 1.1, connections are persistent by default (also known as keep-alive connections): the same TCP connection can carry multiple HTTP requests and responses. After the initial connection is established, it is reused for subsequent requests, avoiding the cost of setting up a new connection each time. Either side can announce that it will close the connection by sending the Connection: close header.

- Advantage: This feature reduces latency and improves performance by minimizing the number of connections that need to be opened and closed.
- Drawback: The server must keep each TCP connection open until it is explicitly closed or its idle timeout (keep-alive timeout, sometimes loosely called a TTL) expires, which consumes memory and other resources such as sockets and file descriptors.

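Browsers also limit how many TCP connections they open at the same time to a single host (commonly 6 in modern browsers); this is a browser policy, not a limit defined by HTTP 1.1. On the server side, because every idle persistent connection holds resources, a shorter keep-alive timeout frees them sooner, at the cost of more reconnections.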
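The sketch below demonstrates persistent connections with Python's standard library: a throwaway local server (`http.server`) speaking HTTP/1.1 and a client (`http.client.HTTPConnection`) that reuses its socket across requests. The handler class, the `fetch` helper, and the `connections` counter are hypothetical names for this demo. Sending `Connection: close` makes the same client fall back to one connection per request, as in HTTP 1.0.

```python
import http.client
import http.server
import threading


class EchoPathHandler(http.server.BaseHTTPRequestHandler):
    # Speak HTTP/1.1 so the server keeps the connection open between requests by default.
    protocol_version = "HTTP/1.1"

    def setup(self):
        super().setup()
        self.server.connections += 1  # one handler instance per accepted TCP connection

    def do_GET(self):
        body = f"you asked for {self.path}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        # Content-Length tells the client where this response ends on the shared connection.
        self.send_header("Content-Length", str(len(body)))
        if self.headers.get("Connection", "").lower() == "close":
            self.send_header("Connection", "close")  # confirm we will close after this response
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def fetch(paths, keep_alive=True):
    """Fetch each path in turn; return (bodies, TCP connections the server accepted)."""
    server = http.server.HTTPServer(("127.0.0.1", 0), EchoPathHandler)
    server.connections = 0
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
    headers = {} if keep_alive else {"Connection": "close"}
    bodies = []
    try:
        for path in paths:
            # http.client reopens the socket automatically if the previous one was closed.
            conn.request("GET", path, headers=headers)
            bodies.append(conn.getresponse().read().decode())
    finally:
        conn.close()        # lets the server's handler loop see end-of-stream and finish
        server.shutdown()
        server.server_close()
    return bodies, server.connections


if __name__ == "__main__":
    pages = ["/index.html", "/style.css", "/app.js"]
    print(fetch(pages))                    # persistent: one TCP connection
    print(fetch(pages, keep_alive=False))  # Connection: close: one connection per request
```

Under these assumptions, `fetch(pages)` reports one TCP connection for all three requests, while `fetch(pages, keep_alive=False)` reports three. Setting Content-Length matters here: without it (or chunked encoding), the client could only find the end of each response by waiting for the connection to close.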

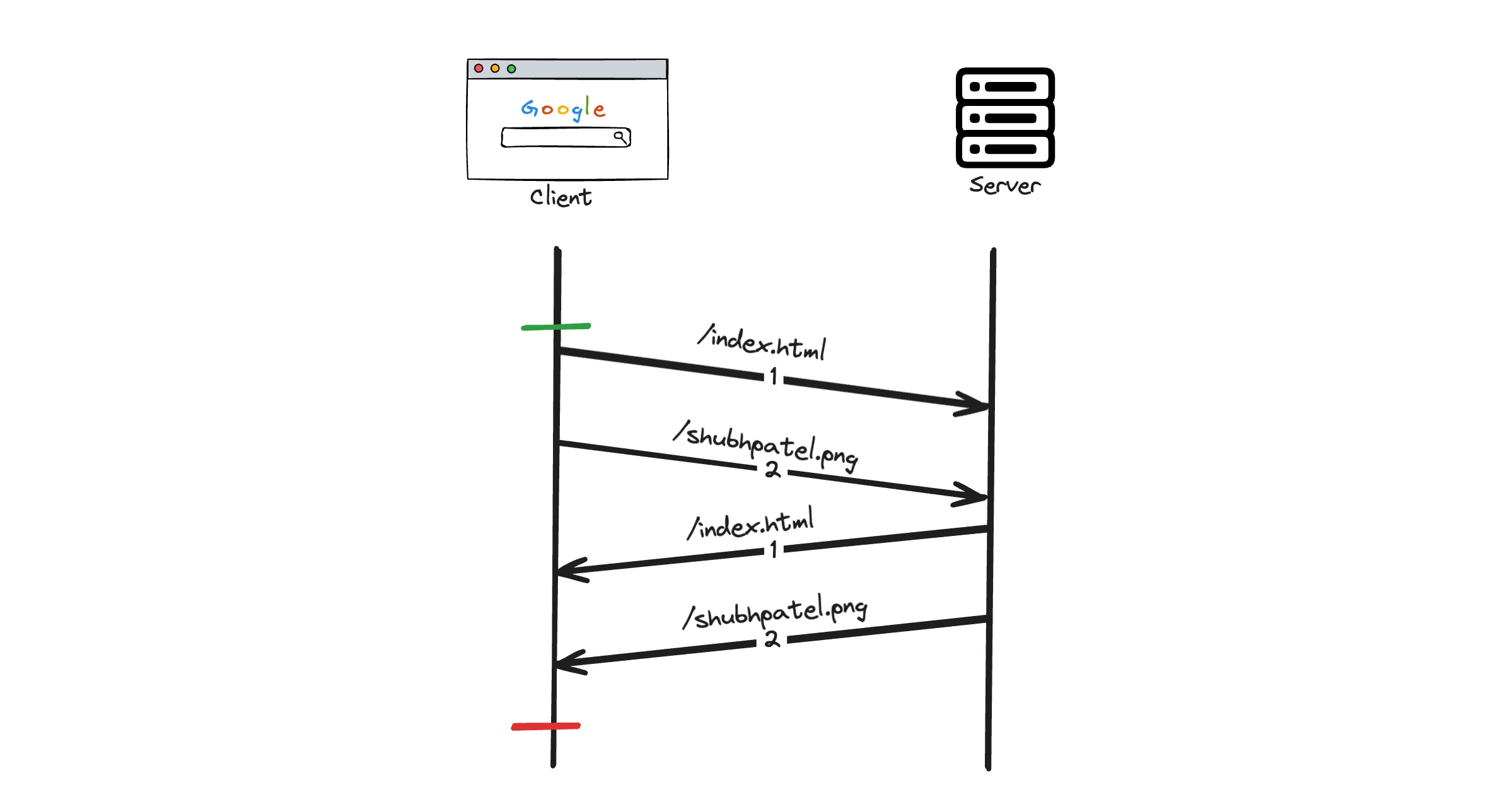

Pipelining

Pipelining allows clients to send multiple HTTP requests without waiting for each corresponding response. This reduces latency, as the client doesn't have to wait for one response before sending the next request. However, the responses must arrive in the same order as the requests, which leads to a problem known as head-of-line (HOL) blocking: a slow response at the front of the queue delays every response behind it.

- Example: If a client requests index.html first and then image.png, the server must respond to index.html before image.png, even if the image is ready to send first.
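A minimal sketch of head-of-line blocking under pipelining, assuming a single connection that can send one response at a time and must send responses in request order. The ready times and transfer times are made-up numbers in milliseconds, and `pipelined_finish_times` is a hypothetical helper.

```python
def pipelined_finish_times(responses):
    """responses: (name, ready_at_ms, transfer_ms) tuples in request order.

    Returns {name: finish_ms} when responses must be sent in request order.
    """
    finish = {}
    link_free_at = 0  # when the connection finishes sending the previous response
    for name, ready_at, transfer in responses:
        # A response can start only once it is ready AND everything before it has been sent.
        start = max(ready_at, link_free_at)
        link_free_at = start + transfer
        finish[name] = link_free_at
    return finish


if __name__ == "__main__":
    print(pipelined_finish_times([("index.html", 100, 20), ("image.png", 10, 5)]))
```

With these numbers, image.png is ready at 10 ms and needs only 5 ms to send, yet it cannot finish until 125 ms because it is queued behind index.html, which finishes at 120 ms.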

Chunked Transfer Encoding

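Chunked transfer encoding is used when the server does not know the total size of the response body before it starts sending, for example when content is generated on the fly or streamed. Instead of declaring a Content-Length up front, the server sends the body as a series of chunks.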

The server signals this with a response header:

Transfer-Encoding: chunked

How it works:

The body is broken into chunks. Each chunk starts with its size in bytes, written in hexadecimal and followed by CRLF; the chunk data follows, also terminated by CRLF. The end of the body is marked by a zero-size chunk.

Client side: the client reads each size line, reads exactly that many bytes of data, and repeats until it reaches the zero-size chunk.
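The framing described above can be sketched as a small encoder and decoder. This is a simplified illustration with hypothetical function names: the decoder skips chunk extensions (text after ";" on the size line) and does not parse trailer fields.

```python
def encode_chunked(parts):
    """Encode an iterable of byte strings as an HTTP/1.1 chunked body."""
    out = bytearray()
    for part in parts:
        if not part:
            continue  # an empty chunk would be read as the end of the body
        out += f"{len(part):X}\r\n".encode("ascii")  # chunk size in hexadecimal
        out += part + b"\r\n"                         # chunk data, then CRLF
    out += b"0\r\n\r\n"  # zero-size last chunk, then an empty trailer section
    return bytes(out)


def decode_chunked(body):
    """Decode a chunked body, ignoring chunk extensions and trailers."""
    data = bytearray()
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end].split(b";")[0], 16)  # drop any ";extension"
        pos = line_end + 2
        if size == 0:
            return bytes(data)  # zero-size chunk: end of the body
        data += body[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


if __name__ == "__main__":
    wire = encode_chunked([b"Hello, ", b"world!"])
    print(wire)
    print(decode_chunked(wire))
```

Here the two pieces become `7\r\nHello, \r\n6\r\nworld!\r\n0\r\n\r\n` on the wire, and decoding returns the original `Hello, world!`.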

Cache Control

Cache control headers manage how responses are cached by browsers and intermediate proxies, significantly improving performance by reducing repeated requests for the same resource.

Common Cache-Control Directives:

- max-age: Specifies, in seconds, how long a response stays fresh. For example, max-age=10 means the cached response can be reused for 10 seconds without revalidation.
- no-cache: The response may be stored, but the cache must revalidate it with the server before each reuse.
- no-store: The response must not be stored in any cache.
- public and private: public allows the response to be stored by any cache, including shared caches such as proxies and CDNs, while private restricts storage to a private cache, typically the user's browser.
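The directives above can be sketched as a tiny parser plus a freshness check. This is a deliberately simplified model with hypothetical function names: it ignores the Expires and Age headers, heuristic freshness, and commas inside quoted values (such as `private="a, b"`).

```python
def parse_cache_control(value):
    """Split a Cache-Control value into {directive: argument, or True if it has none}."""
    directives = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        name, sep, arg = item.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else True
    return directives


def can_reuse_without_revalidation(directives, age_seconds):
    """Rough check: may a stored response of this age be served without asking the server?"""
    if "no-store" in directives or "no-cache" in directives:
        return False  # never stored, or must be revalidated before every reuse
    max_age = directives.get("max-age")
    if max_age is None or max_age is True:
        return False  # no explicit lifetime; this sketch does not guess one
    return age_seconds < int(max_age)  # fresh while its age is below max-age


if __name__ == "__main__":
    cc = parse_cache_control("public, max-age=10")
    print(cc)
    print(can_reuse_without_revalidation(cc, 5), can_reuse_without_revalidation(cc, 10))
```

With `public, max-age=10`, a 5-second-old copy can be reused directly, while a 10-second-old copy is stale and must be revalidated.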

HTTP 2

HTTP/2 introduces significant improvements over HTTP/1.1, focusing on performance and efficiency.

Binary Protocol

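HTTP/2 uses a binary framing layer instead of the text format used by HTTP/1.x. Every message is split into frames, and each frame starts with a fixed 9-byte header carrying the payload length, frame type, flags, and stream identifier. Fixed-layout frames are simpler and faster to parse than text, where the parser must scan for line endings and cope with variations in whitespace and letter case, which also makes the protocol less error-prone.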

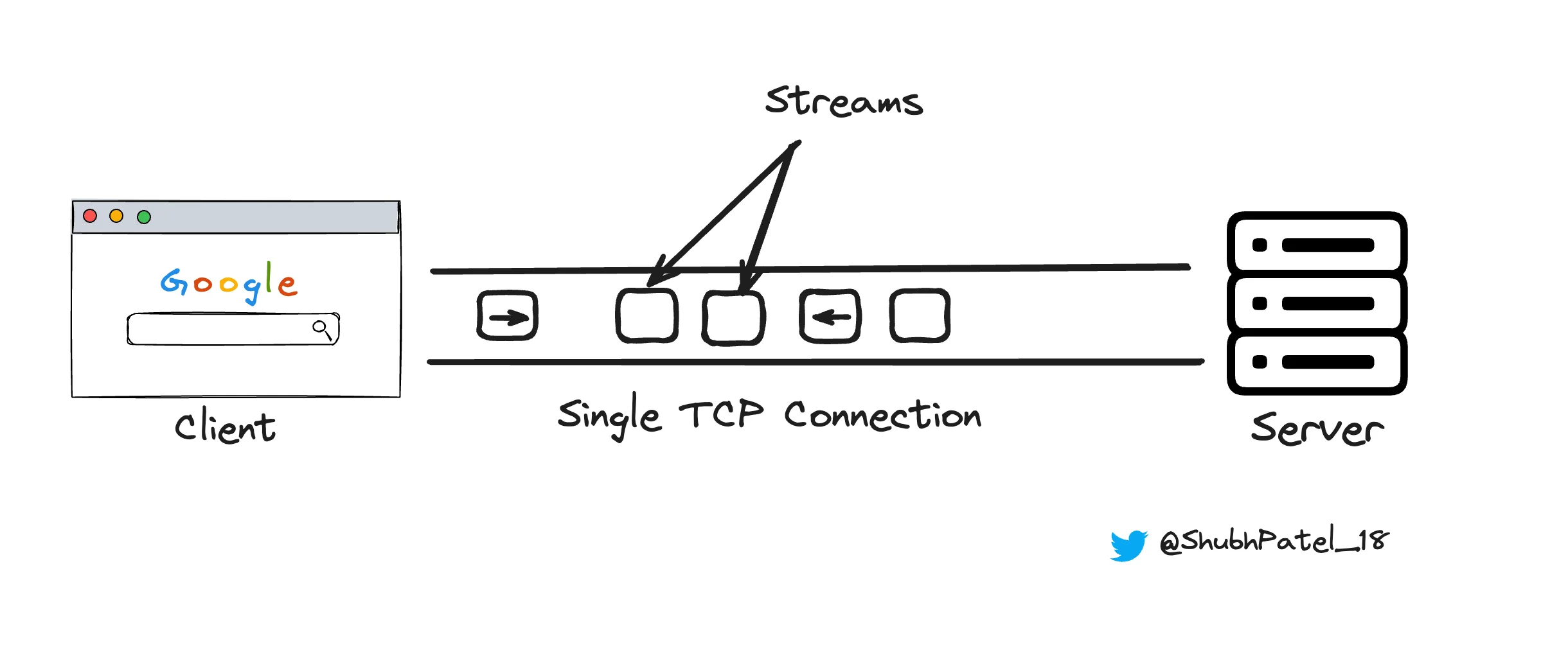
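The fixed frame header can be packed and unpacked with Python's `struct` module. The layout (24-bit length, 8-bit type, 8-bit flags, 1 reserved bit, 31-bit stream identifier) and the type codes follow the HTTP/2 specification; the helper names are hypothetical.

```python
import struct

# Frame type codes defined by the HTTP/2 specification (RFC 7540, section 6).
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}

# Big-endian, no padding: 24-bit length (as 8 + 16 bits), type, flags, reserved bit + stream id.
HEADER_FORMAT = ">BHBBI"  # 1 + 2 + 1 + 1 + 4 = 9 bytes


def build_frame_header(length, frame_type, flags, stream_id):
    """Pack the fixed 9-byte frame header."""
    if not 0 <= length < 1 << 24:
        raise ValueError("length must fit in 24 bits")
    return struct.pack(HEADER_FORMAT, length >> 16, length & 0xFFFF,
                       frame_type, flags, stream_id & 0x7FFFFFFF)


def parse_frame_header(header):
    """Unpack the fixed 9-byte frame header into a dict."""
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length_hi, length_lo, frame_type, flags, stream_word = struct.unpack(HEADER_FORMAT, header)
    return {
        "length": (length_hi << 16) | length_lo,
        "type": FRAME_TYPES.get(frame_type, f"UNKNOWN(0x{frame_type:x})"),
        "flags": flags,
        "stream_id": stream_word & 0x7FFFFFFF,  # the top bit is reserved and ignored
    }


if __name__ == "__main__":
    # A HEADERS frame with a 13-byte payload, END_HEADERS flag (0x4), on stream 1.
    raw = build_frame_header(13, 0x1, 0x4, 1)
    print(raw.hex(), parse_frame_header(raw))
```

Because every field sits at a known offset, the receiver reads exactly 9 bytes, learns how long the payload is, and can hand the frame to the right stream without scanning any text.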

Multiplexing

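Multiplexing allows multiple requests and responses to be in flight at the same time over a single TCP connection. Each request/response pair gets its own stream, and frames from different streams can be interleaved on the connection and reassembled by stream identifier. This removes the HTTP-level head-of-line blocking of HTTP/1.x, where responses had to come back in request order. TCP-level head-of-line blocking remains, however: because all streams share one TCP connection, a single lost packet stalls every stream until it is retransmitted.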
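A simplified model of multiplexing: each stream's data is cut into frames, the frames are interleaved onto one "connection" (a list), and the receiver reassembles them by stream ID. Frames here are bare `(stream_id, bytes)` tuples with no real frame headers, flow control, or priorities, and round-robin scheduling is an assumption; real servers choose their own order. The odd stream IDs mirror HTTP/2's rule that client-initiated streams use odd numbers.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, max_size):
    """Cut one stream's payload into frames of at most max_size bytes."""
    return [(stream_id, payload[i:i + max_size]) for i in range(0, len(payload), max_size)]


def interleave(streams, max_size):
    """Round-robin frames from several streams onto a single connection."""
    per_stream = [split_into_frames(sid, data, max_size) for sid, data in streams.items()]
    wire = []
    for batch in zip_longest(*per_stream):  # take one frame from each stream per round
        wire.extend(frame for frame in batch if frame is not None)
    return wire


def reassemble(wire):
    """Receiver side: append each frame's bytes to the stream it belongs to."""
    out = defaultdict(bytes)
    for sid, chunk in wire:
        out[sid] += chunk
    return dict(out)


if __name__ == "__main__":
    streams = {1: b"<html></html>", 3: b"body{}", 5: b"init();"}
    wire = interleave(streams, 4)
    print([sid for sid, _ in wire])
    print(reassemble(wire) == streams)
```

The small CSS and JavaScript streams finish after two frames each instead of waiting for the whole HTML response, which is the point of multiplexing.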

Header Compression

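HTTP/2 uses HPACK compression to reduce the size of headers, which can be quite large. In HTTP/1.x, headers are sent as plain text with every request, even though many of them (cookies, user agent, accept headers) repeat unchanged. HPACK combines a static table of common header fields, a dynamic table of fields already sent on the connection, and Huffman coding for literal values, so a repeated header can be sent as a small index instead of its full text.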
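The core idea of the dynamic table can be shown with a toy model. This is not the real HPACK algorithm or wire format: there is no static table, no Huffman coding, no table size limit, and new entries are appended rather than inserted at the front as HPACK does. `toy_encode` is a hypothetical name.

```python
def toy_encode(headers, table):
    """Encode one request's header fields against a table shared across the connection.

    Toy model only: the table grows without limit and is mutated in place.
    """
    encoded = []
    for field in headers:
        if field in table:
            encoded.append(("index", table.index(field)))  # seen before: send a small reference
        else:
            table.append(field)                             # first time: send in full and remember it
            encoded.append(("literal", field))
    return encoded


if __name__ == "__main__":
    table = []
    request = [(":method", "GET"), (":path", "/"), ("user-agent", "demo-client/1.0")]
    print(toy_encode(request, table))  # first request: everything sent literally
    print(toy_encode(request, table))  # repeat: only index references
```

The second, identical request is encoded entirely as index references; HPACK achieves the same effect with a much more compact binary encoding.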

Server Push

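Server Push allows the server to send resources to the client before the client explicitly requests them, announcing each pushed resource with a PUSH_PROMISE frame. The goal is to improve page load times by preemptively sending resources the client will need. In practice the gains proved hard to realize (for example, the server may push resources the client already has cached), and major browsers such as Chrome have since disabled support for it.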

Example: When a client requests an HTML page, the server can push associated CSS and JavaScript files before the client asks for them, ensuring they're ready when needed.

Stream Prioritization

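HTTP/2 allows clients to signal how important each stream is. In the original HTTP/2 scheme, a stream can depend on another stream and carries a weight from 1 to 256; sibling streams share the available resources in proportion to their weights. Servers treat these signals as hints, so critical resources (such as CSS) can be delivered before less critical ones (such as images), making essential content available sooner. Later revisions of HTTP/2 deprecate this dependency-and-weight scheme in favor of simpler priority signals.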

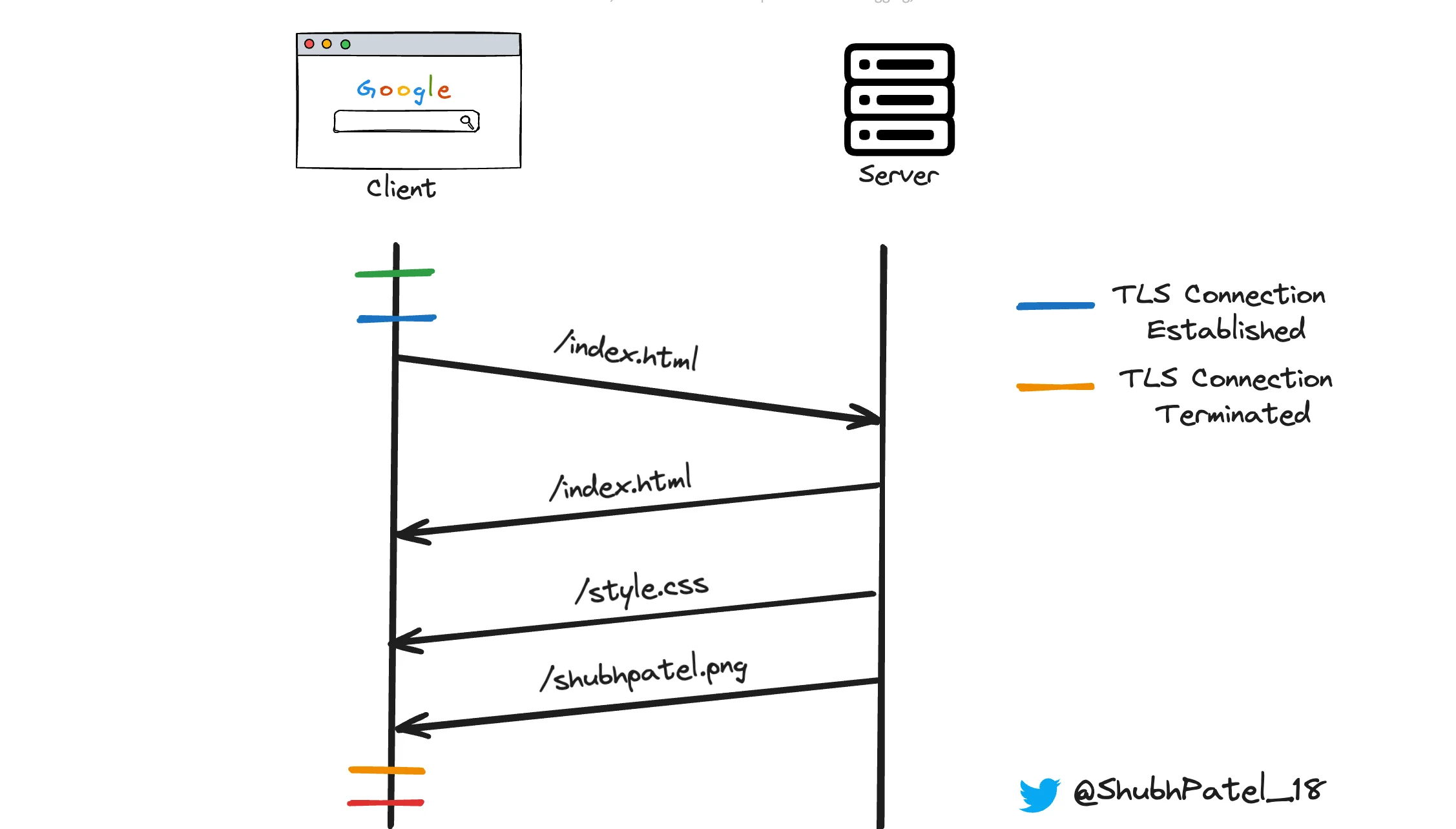
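The proportional sharing between sibling streams can be worked out directly: each stream gets capacity × weight / (sum of sibling weights). The sketch below covers only siblings under one parent (no dependency tree), and the capacity, stream IDs, and weights are made-up values; `share_bandwidth` is a hypothetical helper.

```python
def share_bandwidth(capacity, weights):
    """Split capacity among sibling streams in proportion to their weights (1-256)."""
    for stream_id, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {stream_id}: weight must be between 1 and 256")
    total = sum(weights.values())
    return {stream_id: capacity * weight / total for stream_id, weight in weights.items()}


if __name__ == "__main__":
    # Assumed: 1000 KB/s to share; CSS on stream 3, JS on stream 5, an image on stream 7.
    print(share_bandwidth(1000, {3: 200, 5: 100, 7: 100}))
```

With these weights the CSS stream gets half of the capacity (500 KB/s) and the other two streams get 250 KB/s each.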

Improved Security

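HTTP/2 is designed to work with HTTPS. The specification also defines a cleartext mode (h2c), but browsers support HTTP/2 only over TLS, where client and server agree on the "h2" protocol during the TLS handshake using ALPN (Application-Layer Protocol Negotiation). HTTP/2 over TLS requires TLS 1.2 or newer, which in practice makes encrypted connections the default for HTTP/2 on the web.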
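A sketch of the client side of ALPN using Python's `ssl` module: the client offers "h2" first and "http/1.1" as a fallback, and after the handshake asks which protocol the server selected. The helper names are hypothetical, and `negotiated_protocol` needs network access to a real HTTPS server.

```python
import socket
import ssl


def h2_client_context():
    """TLS client context that offers HTTP/2 via ALPN."""
    ctx = ssl.create_default_context()            # certificate and hostname verification on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # HTTP/2 over TLS requires TLS 1.2 or newer
    ctx.set_alpn_protocols(["h2", "http/1.1"])    # preference order: HTTP/2, then HTTP/1.1
    return ctx


def negotiated_protocol(host, port=443):
    """Return the ALPN protocol the server picked ("h2", "http/1.1", or None)."""
    ctx = h2_client_context()
    with socket.create_connection((host, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.org")`, for instance, returns "h2" if that server supports HTTP/2 over TLS, "http/1.1" if it only supports the older protocol, or None if it does not take part in ALPN.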